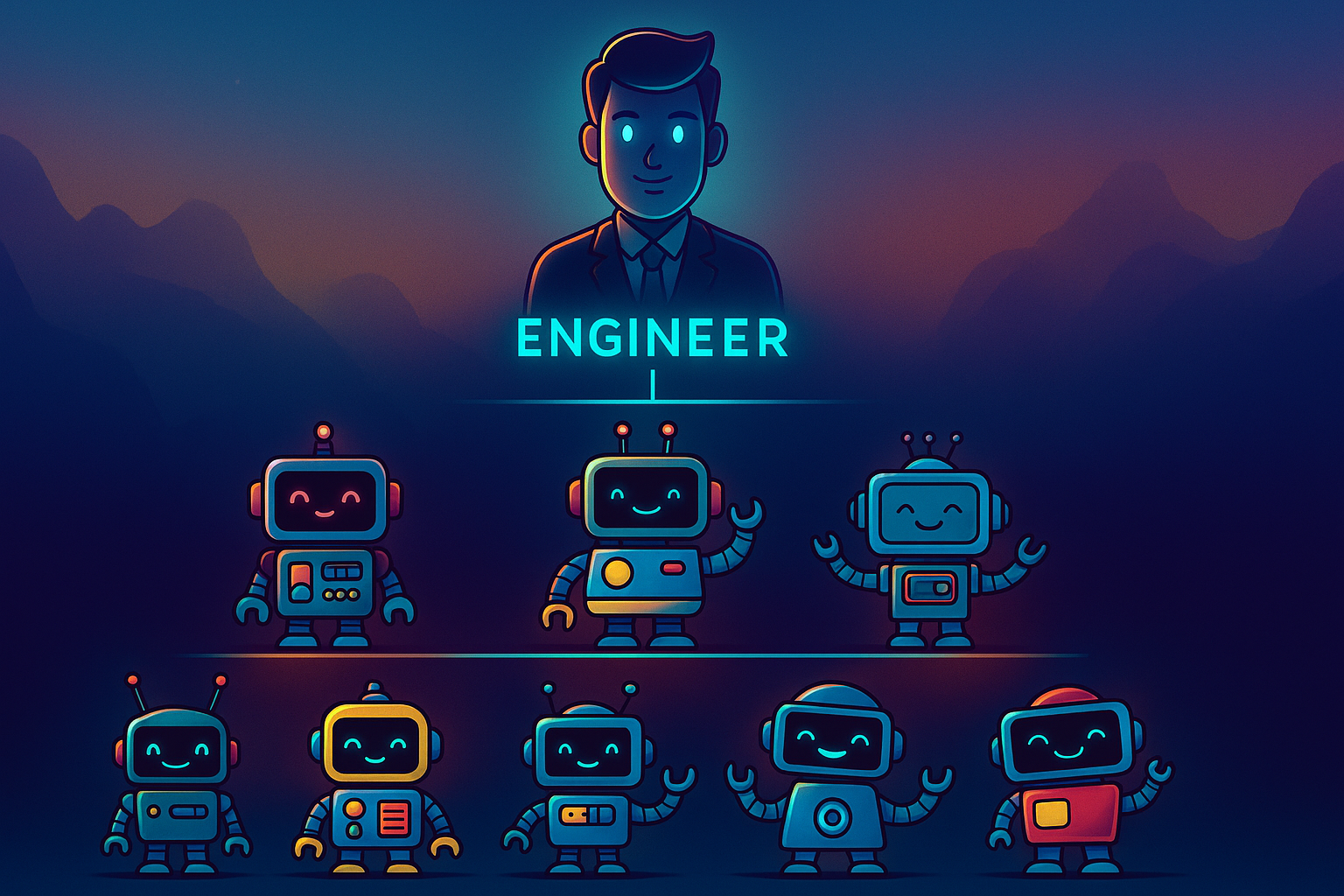

From Copilot to Agent: What It Takes to Build an AI-Native Engineering Org

Inside our journey from autocomplete copilots to autonomous agents—and the hard lessons we learned about documentation, developer experience, and speed.

TL;DR

AI-native engineering doesn’t start with better models.

It starts with rewriting your documentation for machines.

This post shares our journey at MadKudu as we transitioned from autocomplete copilots to agents that fix bugs, generate code from tickets, and ship features—backed by a culture that prioritizes speed, specs, and developer experience above all.

Why we're betting on agents

We’ve been saying internally: $1B in ARR with 1 employee isn’t a meme—it’s inevitable. And engineering is where that reality has to take root first.

The inspiration was everywhere (Devin, GitHub Copilot), but those tools still felt like sidekicks. When GPT-4o and LLaMA 3 dropped in May and June, things changed. We started running agents locally and switched to Cursor. And when Cursor launched “Composer” (now Agent), it hit us:

We weren’t just completing code anymore. We were generating it from tickets.

But the shift didn’t come from a tool. It came from documentation.

What we actually built

Here’s how we moved from experimenting to deploying production agents:

- Support agent (Nia)

  Started as an obvious use case. Turned out to be a stress test for our documentation and exposed all its flaws.

- MCP agent

  Parses Asana tickets, analyzes screenshots (GPT-4o), queries logs and metrics, writes responses and PRs. End-to-end execution.

- Cursor rules

  Encoded both company-level and personal workflows into Cursor’s rule engine. This is becoming strategic IP.

- Engineering How-To system

  Built a structured library to answer questions like “How do I pull usage data?” with copy-pasteable examples. Designed for LLMs, not humans.

- SpecStory

  An internal spec format used to troubleshoot bugs with agents, especially when long chains of reasoning are needed.

- Knowledge base indexing

  Our customer-facing docs are now available to Cursor. The agent understands what a “MadKudu Segment” or “Lead Fit” actually means.

- Fake monolith trick

  We cloned all services into a central “Admin” repo. Cursor indexes it as if we were a monolith. It’s hacky but wildly effective.
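For readers who want to try the fake monolith trick, here is a minimal sketch of a sync script. It is not our actual tooling: the service names, URLs, and the `services/` folder layout are placeholders, and how the clones are tracked in the parent repo (ignored, submodules, or something else) is left open.

```python
from pathlib import Path
import subprocess

# Hypothetical service list: names and URLs are placeholders.
SERVICES = {
    "api": "git@example.com:acme/api.git",
    "scoring": "git@example.com:acme/scoring.git",
}


def plan_sync(services: dict[str, str], root: Path) -> list[list[str]]:
    """Return the git commands needed to mirror each service under root/services."""
    commands = []
    for name, url in sorted(services.items()):
        target = root / "services" / name
        if (target / ".git").exists():
            # Already cloned: fast-forward to the latest commit.
            commands.append(["git", "-C", str(target), "pull", "--ff-only"])
        else:
            # A shallow clone is enough when the goal is editor indexing.
            commands.append(["git", "clone", "--depth", "1", url, str(target)])
    return commands


def sync(services: dict[str, str], root: Path) -> None:
    """Run the planned commands, stopping at the first failure."""
    for command in plan_sync(services, root):
        subprocess.run(command, check=True)
```

Calling `sync(SERVICES, Path("admin"))` on a schedule would keep the combined tree fresh. Separating `plan_sync` from `sync` makes it easy to review what will run before touching the network.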

What we learned (fast and hard)

Agents don’t struggle with code. They struggle with ambiguity.

Here’s what surfaced quickly:

- Docs need to be AI-parseable

  Use Mermaid instead of screenshots. Minimize room for interpretation. Assume your audience is a junior engineer with perfect recall and zero context.

- Prompt engineering is dead. Long live context engineering.

  Giving agents the right context (linked tickets, logs, specs, diagrams) is the real skill. Specs are the new code.

- LLMs don’t follow runbooks

  They improvise. We built a custom spec language for runbooks so agents stop coloring outside the lines.

- Tests are your last line of defense

  Unit tests help. But integration and end-to-end tests are where the value compounds. Every agent commit needs a safety net.

- Developer velocity bottlenecks are exposed

  When a bug fix takes 5 minutes, CI wait times feel unbearable. Spinning up local environments becomes the critical path.

- Not everyone levels up

  Some engineers doubled their output. Others stalled. The gap between Level 3 and Level 5 has never been more real.
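To make the first two lessons concrete, here is a rough sketch of what "context engineering" can look like in code. Everything in it is an assumption for illustration: `to_mermaid`, `ContextBundle`, and the section titles are hypothetical names, not part of our stack or of any library.

```python
from dataclasses import dataclass, field


def to_mermaid(edges: list[tuple[str, str]]) -> str:
    """Render (source, target) pairs as a Mermaid flowchart.

    Assumes node names are simple identifiers (no spaces or punctuation).
    """
    lines = ["flowchart LR"]
    for source, target in edges:
        lines.append(f"    {source} --> {target}")
    return "\n".join(lines)


@dataclass
class ContextBundle:
    """Everything an agent should see before it starts on a ticket."""

    ticket: str
    spec: str = ""
    logs: list[str] = field(default_factory=list)
    diagram: str = ""

    def render(self, max_log_lines: int = 20) -> str:
        """Join the non-empty sections into one text block for the agent."""
        sections = [("Ticket", self.ticket)]
        if self.spec:
            sections.append(("Spec", self.spec))
        if self.logs and max_log_lines > 0:
            # Keep only the most recent lines so logs do not crowd out the spec.
            sections.append(("Logs", "\n".join(self.logs[-max_log_lines:])))
        if self.diagram:
            sections.append(("Diagram (Mermaid)", self.diagram))
        return "\n\n".join(f"## {title}\n{body}" for title, body in sections)
```

The diagram travels as text, so the agent reads the same flow a human would see in a rendered chart, with no screenshot to misinterpret.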

What didn't work

Not everything clicked.

- Thread loss in Cursor

  Agents often ask to start a new thread mid-investigation, losing context. Our fix: agents now comment their interim results back in Asana so the next investigation doesn’t start from scratch.

- Nia v1 wasn’t grounded enough

  Early versions hallucinated or failed silently when documentation lacked depth. We thought we could paper over gaps with clever prompts. We couldn’t. The agent surfaced every inconsistency in how we’d built and described the product.

- MCP had no “off ramp”

  If a query failed or an image was unreadable, the agent would stall. We learned to give every agent a fallback: log the error, notify a human, or break the task down further.
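The "off ramp" idea is easy to sketch. The wrapper below is a hypothetical illustration, not our MCP agent: `execute`, `split`, and `notify` are stand-ins you would supply, and the order (log first, then try smaller subtasks, then escalate to a human) is one reasonable choice among several.

```python
import logging
from typing import Callable, Optional

log = logging.getLogger("agent")


def run_with_off_ramp(
    task: str,
    execute: Callable[[str], str],
    split: Optional[Callable[[str], list[str]]] = None,
    notify: Callable[[str], None] = print,
    depth: int = 0,
    max_depth: int = 2,
) -> list[str]:
    """Run a task; on failure, log it, try smaller subtasks, then escalate."""
    try:
        return [execute(task)]
    except Exception as exc:
        # Off ramp 1: always leave a trace of what went wrong.
        log.error("task %r failed: %s", task, exc)
        # Off ramp 2: break the task down, within a bounded depth.
        subtasks = split(task) if split and depth < max_depth else []
        if len(subtasks) > 1:
            results: list[str] = []
            for subtask in subtasks:
                results.extend(
                    run_with_off_ramp(
                        subtask, execute, split, notify, depth + 1, max_depth
                    )
                )
            return results
        # Off ramp 3: nothing left to split, so hand it to a human.
        notify(f"Needs human review: {task!r} ({exc})")
        return []
```

The depth limit matters: without it, a task that keeps splitting and failing would loop instead of stalling, which is not much of an improvement.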

Why it worked for us (when it didn't for others)

- AI-native isn’t a project. It’s a mindset.

  Everyone at MadKudu talks about AI. Every day. It’s okay to spend 4 hours learning a new agent tool, even if it slows you down in the short term.

- We treated documentation like product

  We rewrote everything (technical specs, product features, edge cases) not for humans but for agents.

- We had a head start

  Our “MadMax” framework let us build agents fast. We didn’t have to wait for OpenAI or GitHub to ship the perfect wrapper.

Outcome: the new bar

Our PM is shipping bug fixes.

Not as a demo. Not with help.

Just… shipping.

That’s not a gimmick.

That’s the new bar.
